Peek Inside the F1 Data Center

No cameras allowed, but learn how real-time data works for cars racing around a track at 200 mph.

It’s cold and wet for Sunday’s Formula 1 race in Austin, Texas, where rainfall left over from Hurricane Patricia has dumped more than 6 inches of water on the track. Rain is usually seen as the sport’s great equalizer, one that can leave the traditional leaders at the back of the pack at the start and force them to fight their way to the front. That didn’t happen this time: the Mercedes team’s two drivers locked in the top spots Sunday morning after a brief qualifying round.

This came after practice laps were curtailed on Friday as the rain moved in, and qualifying was postponed indefinitely on Saturday. As of Sunday morning, the race is still scheduled to start at 2 pm local time, but if the rain continues or track conditions worsen, the start will be postponed. John Morrison, the CTO of Formula One Management, said a race has been called off only once before, in Malaysia, when the “water was ankle-deep on the track.” Even then it wasn’t exactly canceled: it was postponed until it was effectively canceled, because the teams had to move on to the next race.

Unfortunately for the F1 organization, the next stop is Mexico City, where organizers are worried about sparse attendance after Patricia hit the country’s western coast. The rain is the big story from this race, but Fortune was there to see behind the scenes, where incredible amounts of data from the cars were sent, analyzed and then immediately packaged into a broadcast. Fortunately, it was dry. Unfortunately, F1 didn’t allow cameras.

A practice run before it started raining. S. Higginbotham for Fortune.

The F1 data center is a grey, steel and plastic temporary structure that sits outside the track and is home to about 200 people during the three days of a race. It takes about two days to set up the structure and the offices and equipment inside it, but only about three hours to take it down. Everything is then loaded onto 15 trucks and driven to the next race if that race is in Europe, or to the airport if it is in the Americas. In the Americas, the gear is loaded onto a jumbo jet and flown to the next race.

In the Austin data center, the servers and broadcasting equipment all sat on air pallets so they could easily move from data center to truck to aircraft and back again. Morrison said that since each jumbo jet can carry 130 tons, he keeps all of the gear under that weight limit to avoid splitting it up and creating more logistical drama. So far, he’s managed.

As for why a car race might need its own portable data center with 130 tons of gear, F1 is arguably the most data-driven sport out there. Each car is outfitted with roughly 150 sensors generating about 2,000 data points a minute. For years, F1 rules have limited how much time teams can spend in wind tunnels and, until the not-so-distant past, how many supercomputing hours they could use. Even today, teams are limited in how many engineers they can have on site, as opposed to those working at their offices back home.

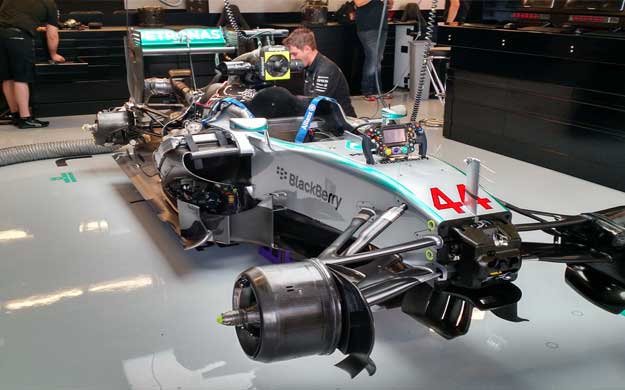

One of the Mercedes cars. A car can have about 150 sensors on it. S. Higginbotham for Fortune.

That is why, over the last three years, Formula One Management has worked with Tata Communications to boost connectivity tenfold, from 100 megabits per second to at least 1 gigabit per second at every track, to handle all of the data needs. Mehul Kapadia, managing director of the F1 business at Tata, says engineers on site can now pull data from their cars and offer insights, while engineers at the teams’ headquarters in Europe can also analyze that data in what amounts to real time and tell drivers how to handle conditions such as a flooded track.

There are about 20 kilometers of fiber laid in ducts at each track, and it gets pulled up when the race moves on because, as Morrison said, “It would be trashed, or in Brazil it would be nicked.” Everything is managed from this grey dome next to the track, where one can watch the cars whizzing around the track on camera feeds, or follow them as numbers generated when a sensor on each car passes over sensors in the track that pick up its exact position and timing. All of the software is written by Morrison’s team.

From there, the F1 broadcast is managed, as is the network connection for the fans’ Wi-Fi, the drivers and the entire race.
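To make the timing idea concrete, here is a minimal sketch of how lap times and gaps could be derived once each car’s sensor crossings of the track are recorded. It is purely illustrative and assumes a single start/finish timing loop that logs a hypothetical `(car_id, seconds)` event per crossing, with a car’s first crossing treated as the start; the function names and sample numbers are invented, and this is not the software Morrison’s team writes.

```python
from collections import defaultdict

# Hypothetical event: (car_id, seconds since session start), logged each time
# a car's sensor crosses an assumed single start/finish timing loop.
Crossing = tuple[str, float]


def _times_by_car(crossings: list[Crossing]) -> dict[str, list[float]]:
    """Group crossing timestamps per car, in chronological order."""
    by_car: dict[str, list[float]] = defaultdict(list)
    for car, t in sorted(crossings, key=lambda c: c[1]):
        by_car[car].append(t)
    return by_car


def lap_times(crossings: list[Crossing]) -> dict[str, list[float]]:
    """Lap time = difference between consecutive crossings of the same car."""
    return {
        car: [round(b - a, 3) for a, b in zip(times, times[1:])]
        for car, times in _times_by_car(crossings).items()
    }


def gap_to_leader(crossings: list[Crossing], lap: int) -> dict[str, float]:
    """Seconds each car trails the first car to complete `lap` (crossing index `lap`)."""
    finish = {
        car: times[lap]
        for car, times in _times_by_car(crossings).items()
        if len(times) > lap  # skip cars that have not completed this lap yet
    }
    if not finish:
        return {}
    leader = min(finish.values())
    return {car: round(t - leader, 3) for car, t in finish.items()}


if __name__ == "__main__":
    # Invented sample data for two hypothetical cars, "A" and "B".
    events = [("A", 0.0), ("B", 0.5), ("A", 95.2), ("B", 96.0),
              ("A", 190.1), ("B", 191.4)]
    print(lap_times(events))         # per-car lap times
    print(gap_to_leader(events, 2))  # gaps after lap 2
```

Real timing systems use more loops per lap (and far richer car telemetry), but the same principle applies: precise crossing timestamps turn into lap times and gaps as soon as they arrive.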

F1 in Real Time

The result of all this connectivity, rapid-fire data center construction and technology is a race experience that puts most real-time data analytics efforts to shame. As Kapadia said, “These guys can tell a driver he needs to take his car a little to the right to save one-tenth of a second on a particular turn, and in a race like this, that can be the difference between winning and losing.”

Source: http://fortune.com/2015/10/25/f1-austin-data-center/